- The Human Guide

MiniMax just dropped the most usable AI yet

The $0.08 model making Claude look expensive

Hello, PromptEdge

Today, you are going to learn about a powerful yet affordable AI model that makes Claude look expensive.

M2 by MiniMax might be the most usable AI model ever built.

MiniMax just launched M2, a new frontier model built natively for agents and code. It’s fast, sharp, and cheap. And it’s making even Claude look overpriced.

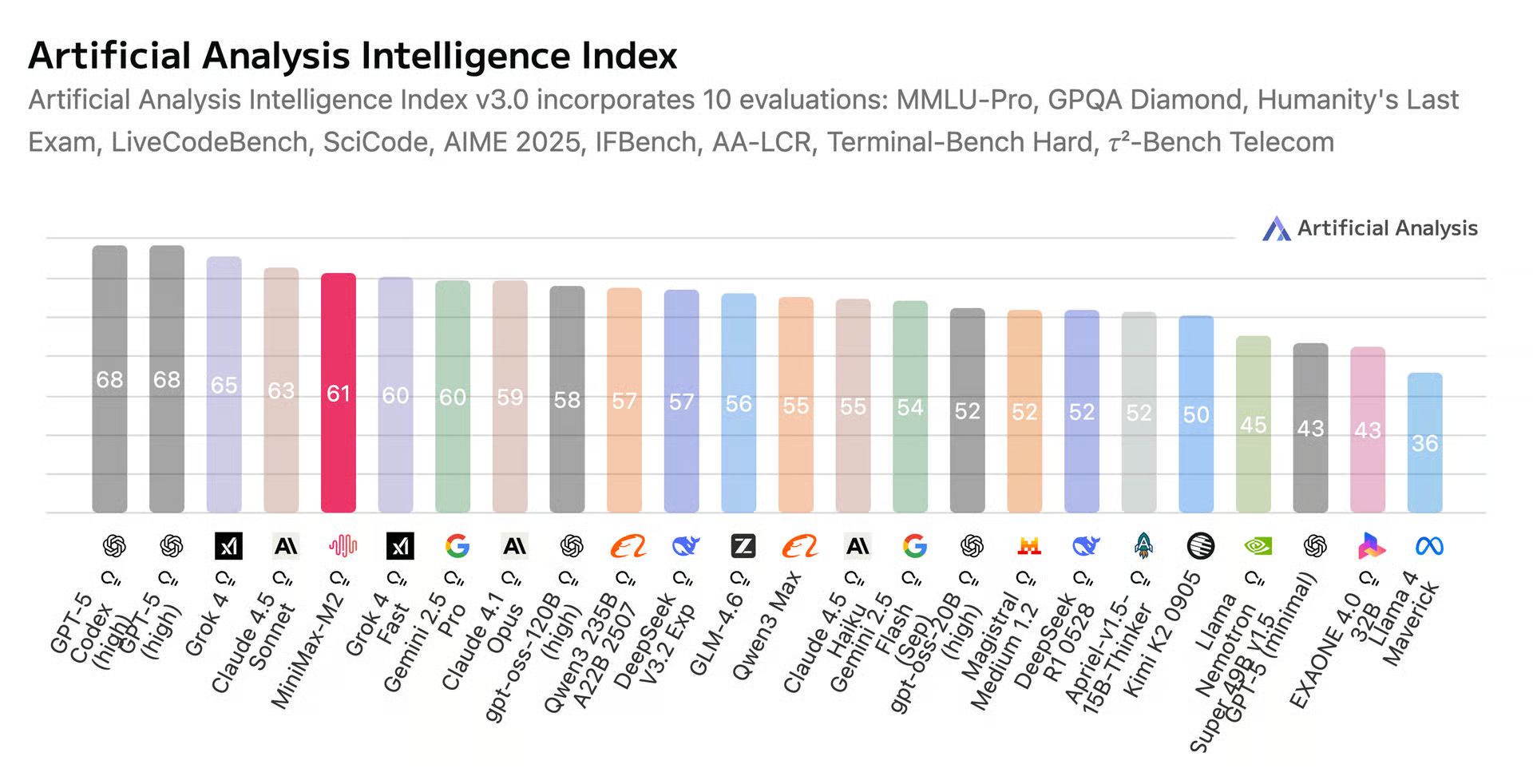

MiniMax-M2 ranks in the top 5 globally for math, science, and programming, according to Artificial Analysis. It runs full dev workflows (edit, debug, fix) from a single prompt.

On benchmarks like Terminal-Bench and SWE-Bench, it outperforms most frontier models. It executes multi-tool tasks across shell, browser, and MCP servers autonomously.

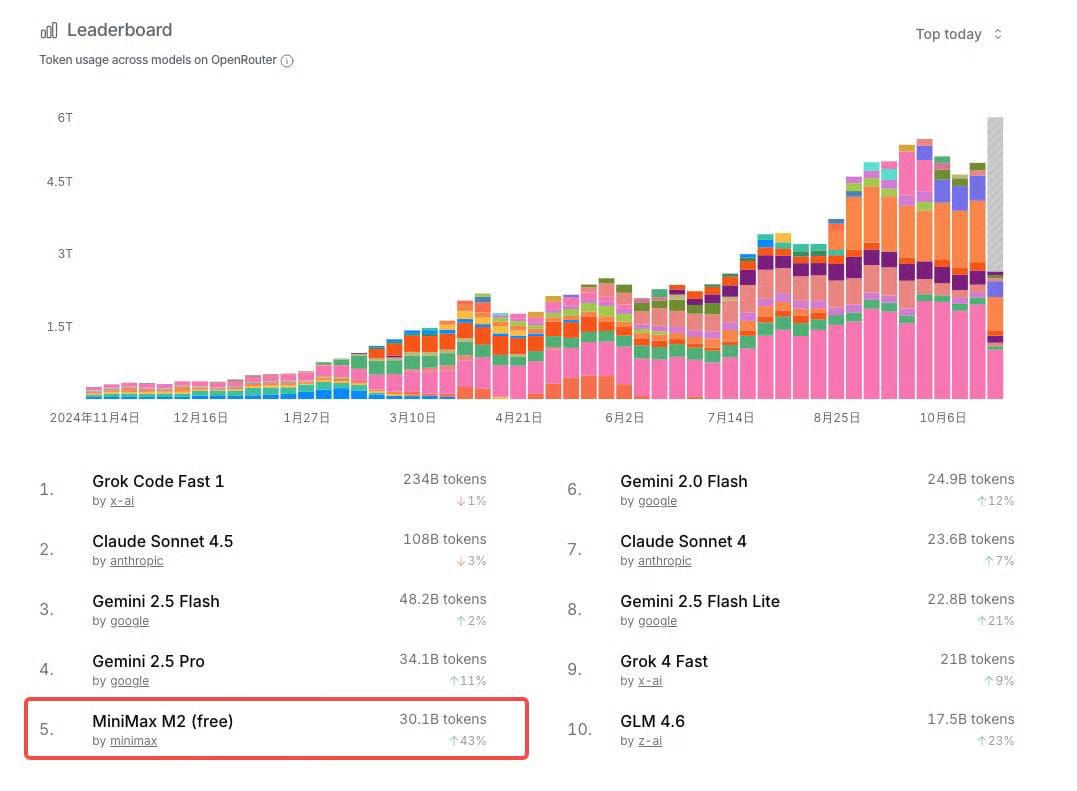

Token Usage among open-source models on OpenRouter

It’s #2 worldwide in deep search and multi-hop reasoning, just behind GPT-5.

You get 200k context, 128k output, and 100 tokens per second (TPS) at 8% of the cost of Claude.
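To put those numbers in perspective, here is a quick back-of-the-envelope sketch in Python. It only uses the figures quoted above (128k output, 100 TPS, 8% of Claude's cost). The function names and the $500 example bill are made up for illustration, and the calculation assumes a steady token rate and a flat 8% ratio, which real workloads won't match exactly.

```python
# Figures quoted in the post; treat them as the article's claims, not measurements.
MAX_OUTPUT_TOKENS = 128_000   # "128k output"
TOKENS_PER_SECOND = 100       # "100 TPS"
COST_RATIO_VS_CLAUDE = 0.08   # "8% of the cost of Claude"


def generation_seconds(output_tokens: int, tps: float = TOKENS_PER_SECOND) -> float:
    """Seconds to stream `output_tokens` at a steady rate (ignores startup latency)."""
    return output_tokens / tps


def equivalent_cost(claude_cost_usd: float, ratio: float = COST_RATIO_VS_CLAUDE) -> float:
    """Cost of the same workload if it really were priced at `ratio` of Claude's bill."""
    return claude_cost_usd * ratio


if __name__ == "__main__":
    secs = generation_seconds(MAX_OUTPUT_TOKENS)
    print(f"Full 128k output at 100 TPS: {secs:.0f} s (about {secs / 60:.1f} min)")
    # The $500 bill is a hypothetical example, not a number from the post.
    print(f"A $500 Claude bill at the 8% ratio: ${equivalent_cost(500.0):.2f}")
```

So a maxed-out 128k response would take roughly 21 minutes to stream at the quoted speed, and a hypothetical $500 Claude bill would come to about $40 if the 8% figure held across the whole workload.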

Here’s what I think:

MiniMax-M2 isn’t just another “cheap alternative.” It’s what happens when a model is trained to actually be used, not just demoed.

If agents are the future, MiniMax-M2 might be the model that gets us there.

Technical document: Hugging Face & GitHub

How to use MiniMax-M2:

MiniMax Agent, powered by MiniMax-M2, is now fully open to all users and free for a limited time: Try it here

The MiniMax-M2 API is now available on the MiniMax Open Platform, also free for a limited time: Check it here

MiniMax-M2 has been open-sourced and is now available for local deployment.